Robots.txt may be a simple text file, but it speaks volumes to search engines. It tells search engines like Google what pages they can and cannot access. But sometimes, we get an error like “Blocked by Robots.txt.” Well, don’t be frightened by this error. In this article, we will sort out two important errors related to Robots.txt.

“In the world of SEO, robots.txt is the silent gatekeeper of your site’s story.”

What exactly is Robots.txt?

Think of Robots.txt as instructions for search engines or “bots.” When a search engine, like Googlebot, arrives at your website, it first checks the robots.txt file to see which pages it should crawl or skip.

For example, you might want search engines to skip certain pages, like specific product pages that are only for members. By using a robots.txt file, you can ask bots not to crawl these pages. Keep in mind that robots.txt is a request to crawlers, not a lock, so truly private content still needs a login.
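To make the "check first, then crawl" idea concrete, here is a minimal sketch using Python's standard urllib.robotparser module. The rules, the /members/ folder, and the example.com URLs are made up for illustration, and this shows how a polite bot could behave rather than how Googlebot is actually implemented.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: ask all bots to stay out of a members-only area.
ROBOTS_TXT = """\
User-agent: *
Disallow: /members/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that is already in memory (no network access)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# A well-behaved bot asks before fetching each URL.
print(parser.can_fetch("Googlebot", "https://example.com/members/offers"))  # False
print(parser.can_fetch("Googlebot", "https://example.com/blog/welcome"))    # True
```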

How Robots.txt Affects SEO

Your robots.txt file plays an important role in SEO. It controls which pages search engines may crawl, which in turn influences which pages appear in Google search results. Blocking pages you don’t want crawled keeps search engines focused on your important pages. When set up correctly, robots.txt helps bots spend their time on your high-value pages instead of low-value ones.

However, incorrect configuration can harm SEO. If the robots.txt file blocks key pages, it could hurt your site’s visibility and even lower your site’s rank in Google.

Common Directives in Robots.txt

The robots.txt file works using specific rules, also known as directives, to manage what search engines see. Here are a few:

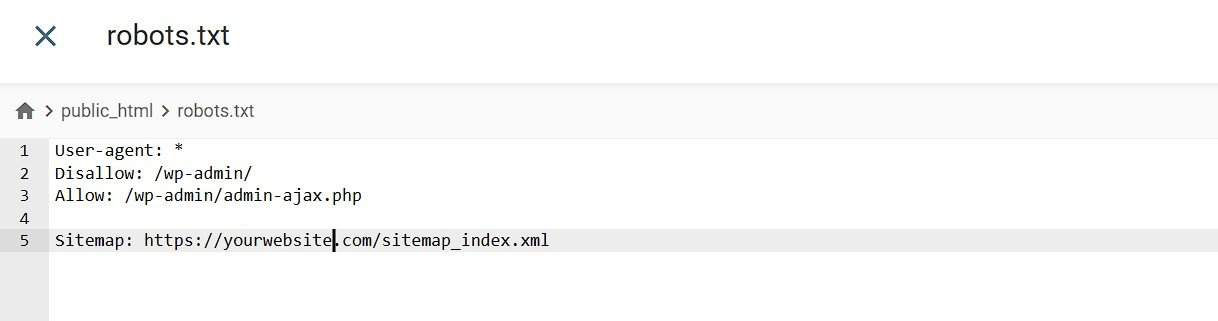

- User-agent: This names the search engine or bot that the rules below it apply to. For example, User-agent: * applies the rules to all bots.

- Disallow: This prevents bots from crawling certain pages or URLs. For example, Disallow: /private/ blocks all URLs in the “private” folder.

- Allow: This is used to permit specific URLs to be crawled, even if a disallow rule exists for similar paths.

Syntax is important here. Incorrect syntax in your robots.txt file can lead to pages getting blocked by accident or search engines ignoring your rules altogether.
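The three directives can be tried out together in a short Python sketch. The bot name ExampleBot and all paths are hypothetical. One caveat: Python's built-in parser applies the first rule that matches, while Google applies the most specific (longest) matching rule, so the Allow line is listed before the Disallow line here to get the same answer either way.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: one group for a specific bot, one for everyone else.
RULES = """\
User-agent: ExampleBot
Disallow: /

User-agent: *
Allow: /private/press-kit/
Disallow: /private/
"""


def is_allowed(robots_txt: str, user_agent: str, path: str) -> bool:
    """Return True if the given bot may crawl the path under these rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, path)


for path in ("/private/notes.html", "/private/press-kit/logo.png", "/about/"):
    verdict = "allowed" if is_allowed(RULES, "Googlebot", path) else "blocked"
    print("Googlebot", path, "->", verdict)

# ExampleBot has its own group, so the "*" rules do not apply to it.
print("ExampleBot /about/ ->", is_allowed(RULES, "ExampleBot", "/about/"))
```

Here Googlebot is blocked from /private/notes.html but allowed into the press-kit subfolder and /about/, while ExampleBot is blocked everywhere.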

Blocked by Robots.txt Error in Google Search Console

A “blocked by robots.txt” error message in Google Search Console means Google has found pages on your site but cannot access them due to your robots.txt settings. When this happens, it affects page indexing, meaning Google might not show these pages in search results, even if you want them to appear.

This issue can happen when:

- You accidentally block important pages with the disallow directive.

- Pages that should be visible in search results are hidden.

- Google found links to pages but cannot access them due to robots.txt rules.
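Accidental blocks often come from rules that match more than intended, because a Disallow value matches every URL that starts with it. The sketch below, with made-up rules and URLs, lists which of your important URLs a given robots.txt would block. The helper name find_blocked is an assumption for illustration, not part of any Google tool.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical mistake: the site owner meant to block /product-drafts/ only,
# but "/product" is a prefix of "/products/" as well.
ROBOTS_TXT = """\
User-agent: *
Disallow: /product
"""

IMPORTANT_URLS = [
    "https://example.com/",
    "https://example.com/products/blue-shirt",
    "https://example.com/product-drafts/new",
]


def find_blocked(robots_txt: str, urls: list[str],
                 user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given bot is not allowed to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


for url in find_blocked(ROBOTS_TXT, IMPORTANT_URLS):
    print("blocked:", url)
```

Both the draft page and the real product page show up as blocked, which is the kind of surprise that leads to this error in Search Console.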

How to Find Robots.txt in Google Search Console

Google Search Console (GSC) is a free tool that helps you monitor your site’s performance in Google. Here’s how to use Google Search Console to check for robots.txt issues:

- Set up Google Search Console (GSC): First, verify your site on Google Search Console. It’s a quick process that connects your site to GSC.

- Go to the Pages tab of GSC: In the Pages tab, scroll down to the “Why pages aren’t indexed” section. It lists the reasons pages were not indexed, including the “Blocked by robots.txt” message.

Steps to Fix the Rule in Your Robots.txt

When you see a ‘blocked by robots.txt’ error, it indicates that Google cannot crawl the page because of robots.txt rules.

To fix errors in your robots.txt file:

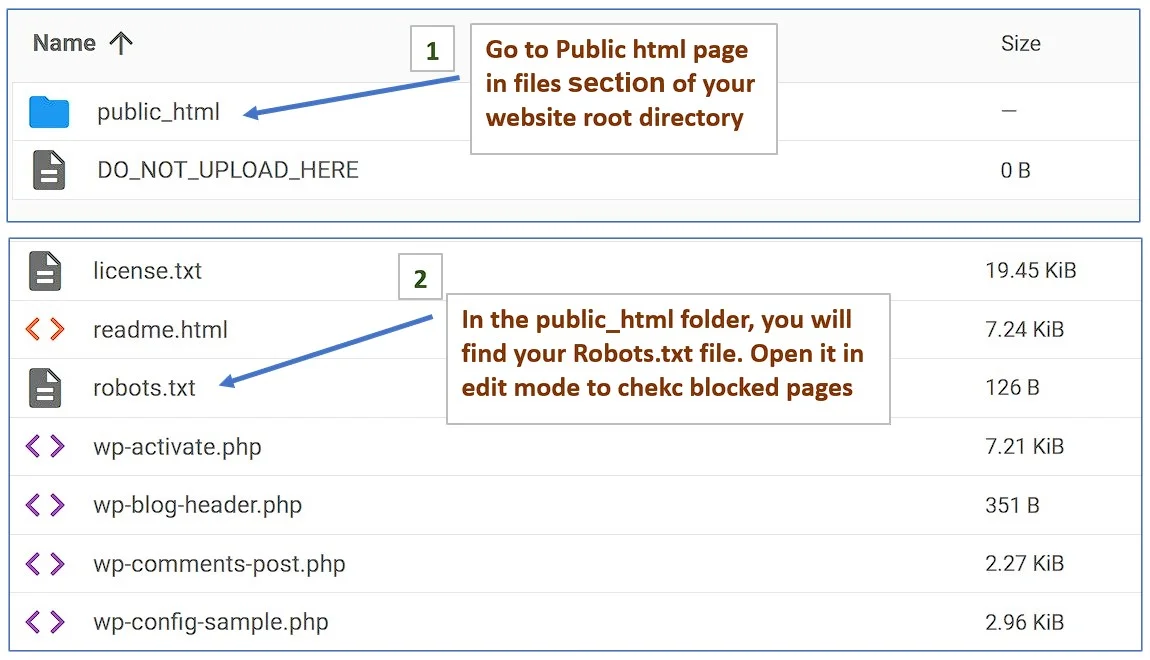

- Update Your Robots.txt File: Open your robots.txt file, located in the root directory of your website, and check that the URLs you want Google to index are not covered by a Disallow rule.

- Use Proper Syntax: Ensure each directive is correctly written to avoid errors.

- Test Your Changes: Use the robots.txt report in Google Search Console, which replaced the older Robots.txt Tester, to confirm that Google can fetch and read your updated file.
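Before uploading a changed file, a quick local syntax check can catch the most common slips. The following is a rough sketch, not a full validator: the function name lint_robots_txt and the list of known directives are assumptions chosen for this example, and it only checks for misspelled directives, missing colons, paths without a leading slash, and rules placed before any User-agent line.

```python
KNOWN_FIELDS = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}


def lint_robots_txt(text: str) -> list[str]:
    """Return a list of human-readable problems found in robots.txt text."""
    problems = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        if ":" not in line:
            problems.append(f"line {number}: missing ':' in {raw.strip()!r}")
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field not in KNOWN_FIELDS:
            problems.append(f"line {number}: unknown directive {field!r}")
        elif field == "user-agent":
            seen_user_agent = True
        elif field in ("allow", "disallow"):
            if not seen_user_agent:
                problems.append(f"line {number}: {field} before any User-agent line")
            if value and not value.startswith(("/", "*")):
                problems.append(f"line {number}: path {value!r} should start with '/'")
    return problems


# A made-up file with three typical mistakes.
SAMPLE = """\
User-agent: *
Dissallow: /tmp/
Disallow: private/
Allow /blog/
"""

problems = lint_robots_txt(SAMPLE)
for problem in problems:
    print(problem)
```

For the sample file this reports the misspelled directive on line 2, the path without a leading slash on line 3, and the missing colon on line 4.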

Disallow Directive Explained

The disallow directive in your robots.txt file tells bots which parts of your site to ignore. For example:

- Disallow: /wp-admin/: This will block any URL in the “wp-admin” folder. Normally, wp-admin is disallowed so that bots do not waste time crawling admin pages.

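The disallow directive is the best way to stop bots from crawling certain pages. However, if you want certain pages removed from Google’s index, using a “noindex” tag is better. The page must stay crawlable for this to work, because Google can only see a noindex tag on pages it is allowed to crawl.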
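A noindex tag is a meta element in the page's HTML head, for example a meta tag with name="robots" and content="noindex". As a small sketch, the Python code below checks whether a page's HTML carries such a tag. The class and function names are invented for this example, and the sample page is made up.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> style tags."""

    def __init__(self):
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            for token in attributes.get("content", "").split(","):
                self.directives.add(token.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if the HTML asks search engines not to index the page."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


# Hypothetical thank-you page that should stay out of search results.
PAGE = """\
<html><head>
<meta name="robots" content="noindex, follow">
</head><body>Thanks for signing up!</body></html>
"""

print(has_noindex(PAGE))  # True
```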

Example of usage of Disallow in a robots.txt file:
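As a minimal sketch, the three most common shapes of a Disallow rule are held below as Python strings so they can be checked with the standard library's parser. The paths are illustrative only.

```python
from urllib.robotparser import RobotFileParser

# Three small robots.txt files that differ only in their Disallow value.
EXAMPLES = {
    "block one folder": "User-agent: *\nDisallow: /wp-admin/\n",
    "block everything": "User-agent: *\nDisallow: /\n",
    "block nothing": "User-agent: *\nDisallow:\n",
}

PATHS = ("/wp-admin/options.php", "/blog/hello-world/")


def can_crawl(robots_txt: str, path: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the bot may crawl the path under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, path)


for label, robots_txt in EXAMPLES.items():
    results = {path: can_crawl(robots_txt, path) for path in PATHS}
    print(label, "->", results)
```

Note how small the difference is between "Disallow: /" (the whole site is blocked) and an empty "Disallow:" (nothing is blocked). A stray slash is one of the easiest ways to block a site by accident.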

Fixing Pages Indexed Though Blocked by Robots.txt

If GSC shows pages with the status “Indexed, though blocked by robots.txt,” it means Google’s crawler could not crawl the page but Google indexed it anyway. This typically happens when other pages link to the blocked URL, so Google knows the page exists and treats it as important enough to index even without reading its content.

To fix this error:

- Change the robots.txt File: Edit your robots.txt so that it only blocks URLs you don’t want in search, and unblock the pages you want indexed. It is quite possible that you have inadvertently disallowed some pages of your website.

- Request Google to Index: After editing the file, use the URL Inspection Tool in your Search Console to request indexing of specific pages.
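The decision behind these two steps can be sketched as a small helper that compares what robots.txt blocks against what you actually want in search. Everything here is hypothetical: the function name plan_fixes, the sample rules, the URLs, and the wording of the suggested actions.

```python
from urllib.robotparser import RobotFileParser


def plan_fixes(robots_txt: str, wanted_in_search: dict[str, bool],
               user_agent: str = "Googlebot") -> dict[str, str]:
    """Map each URL to a suggested action based on its crawl status."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    plan = {}
    for url, wanted in wanted_in_search.items():
        blocked = not parser.can_fetch(user_agent, url)
        if blocked and wanted:
            plan[url] = "remove the Disallow rule, then request indexing"
        elif blocked:
            plan[url] = "keep blocked; if it is indexed, unblock and use noindex"
        else:
            plan[url] = "no robots.txt change needed"
    return plan


# Made-up rules where /guides/ was disallowed by mistake.
ROBOTS_TXT = "User-agent: *\nDisallow: /guides/\nDisallow: /wp-admin/\n"

PAGES = {
    "https://example.com/guides/seo-basics": True,   # should be in search
    "https://example.com/wp-admin/": False,          # should not be in search
    "https://example.com/contact/": True,
}

for url, action in plan_fixes(ROBOTS_TXT, PAGES).items():
    print(url, "->", action)
```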

Best Practices for Robots.txt in WordPress

If you’re using WordPress, you can access your robots.txt file through the WordPress Dashboard or use SEO plugins. Here are some tips:

- Avoid blocking JavaScript and CSS files.

- Make sure only necessary pages are blocked.

- Ideal robots.txt settings: For most websites, this means (a) blocking admin areas like /wp-admin/ and (b) leaving important pages open for crawling.
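These tips can be sketched as a small generator plus a self-check. The function name build_wordpress_robots, the theme path, and the sitemap URL are assumptions for illustration, and the optional Sitemap line goes beyond the tips above. The Allow line for admin-ajax.php follows the rule WordPress itself ships by default. It is placed first only because Python's parser is first-match, while Google would pick the more specific rule regardless of order.

```python
from urllib.robotparser import RobotFileParser


def build_wordpress_robots(sitemap_url: str = "") -> str:
    """Build a small robots.txt that blocks the admin area only."""
    lines = [
        "User-agent: *",
        "Allow: /wp-admin/admin-ajax.php",
        "Disallow: /wp-admin/",
    ]
    if sitemap_url:
        lines += ["", f"Sitemap: {sitemap_url}"]
    return "\n".join(lines) + "\n"


robots_txt = build_wordpress_robots("https://example.com/sitemap.xml")
print(robots_txt)

# Self-check: admin pages are blocked, CSS and JavaScript files are not.
parser = RobotFileParser()
parser.parse(robots_txt.splitlines())
checks = {
    path: parser.can_fetch("Googlebot", path)
    for path in (
        "/wp-admin/index.php",
        "/wp-admin/admin-ajax.php",
        "/wp-content/themes/example/style.css",
        "/wp-includes/js/jquery/jquery.min.js",
    )
}
print(checks)
```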

Using SEO Plugins like Yoast SEO

SEO plugins like Yoast SEO allow you to edit your robots.txt file without touching code.

You can add or remove directives directly in the plugin’s interface, which is very helpful for WordPress users.

Ensuring Proper Configuration for Googlebot

To keep Googlebot away from the pages you want blocked, and only those pages, set up your robots.txt correctly. Make sure the key pages you want in search results are not disallowed. If a page is blocked by robots.txt, Google cannot read its content, so it may drop out of search results or rank poorly, which can reduce your traffic and revenue.

Final Thoughts

Setting up your robots.txt correctly is crucial for SEO. A well-optimized robots.txt file means that the right pages are indexed and visible in search results, leading to more traffic and better site performance.

Key Takeaway Points

- Use Google Search Console to monitor and fix any “Blocked by robots.txt” errors.

- Keep your robots.txt file updated to maintain the right settings for SEO.

- Edit your robots.txt file as your site grows to control indexing.

Want to learn more of the finer points of technical SEO? Check out this article: How to make a Sitemap

FAQ

Q: How do I fix a robots.txt problem?

Ans: To fix a robots.txt problem, check the robots.txt report in Google Search Console, review your file syntax, and ensure that important pages are not blocked.

Q: What does the disallow directive do?

Ans: The disallow directive in a robots.txt file tells search engines which pages they should not crawl.

Q: What is a robots.txt file used for?

Ans: A robots.txt file is used to control which pages search engines can crawl on your site.

Q: How do I access robots.txt?

Ans: To access robots.txt, type the site URL with /robots.txt at the end (e.g., example.com/robots.txt). This file is located in the root directory of your website.

Q: How do I fix “Blocked by robots.txt” in Shopify?

Ans: In Shopify, edit the robots.txt.liquid theme template from the admin, or work with Shopify support, to adjust the robots.txt rules for blocked pages.
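The rule for locating the file, mentioned in the FAQ above, can be sketched in a few lines of Python. The helper name robots_txt_url is made up for this example. Note that each host has its own file, so a subdomain such as shop.example.com is checked separately from example.com.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt address for the site that hosts page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host, replace the path, drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://example.com/blog/post?utm=1"))
# https://example.com/robots.txt
print(robots_txt_url("https://shop.example.com/cart"))
# https://shop.example.com/robots.txt
```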